ELMo

ELMo (Embeddings from Language Models) is one of the new state-of-the-art techniques for producing word embeddings. I was trying to grasp the main principle, and here I present a few highlights that were very useful to me in understanding it.

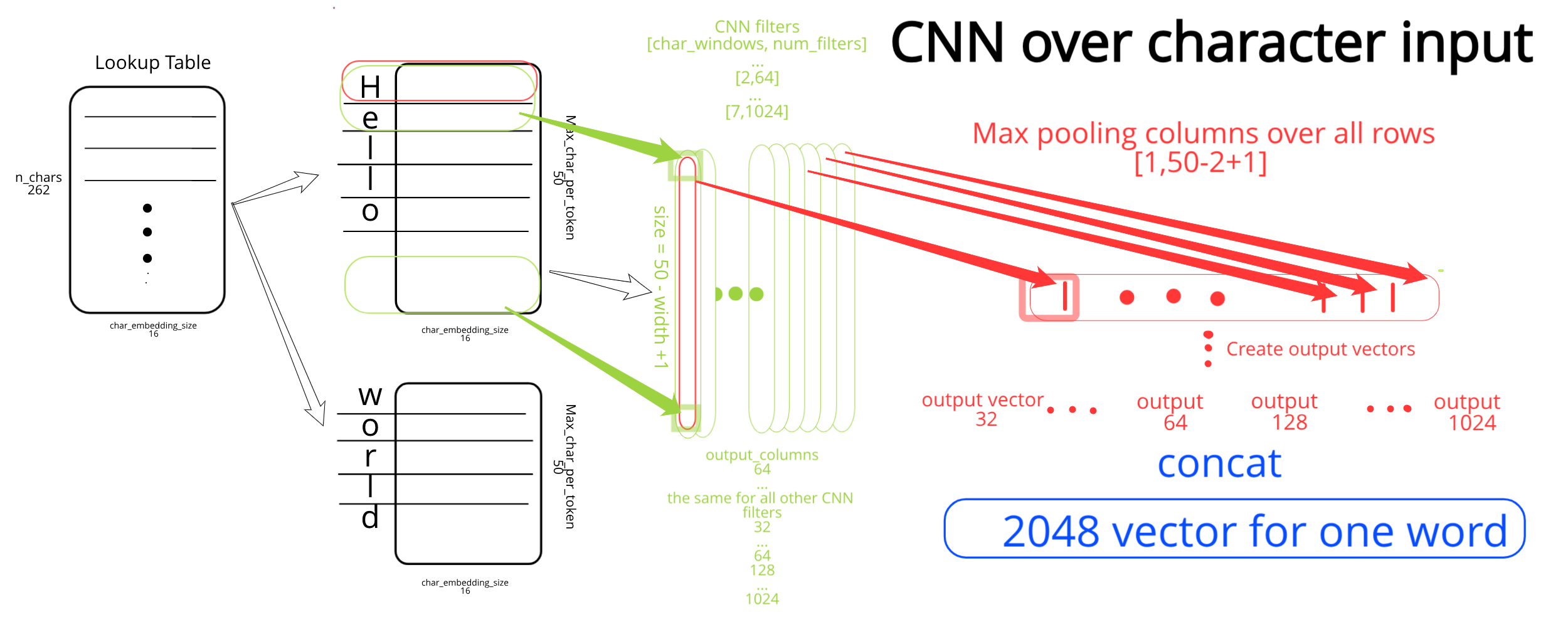

- ELMo's inputs are characters rather than words. The model can thus take advantage of sub-word units to compute meaningful representations even for out-of-vocabulary words (similar to FastText).

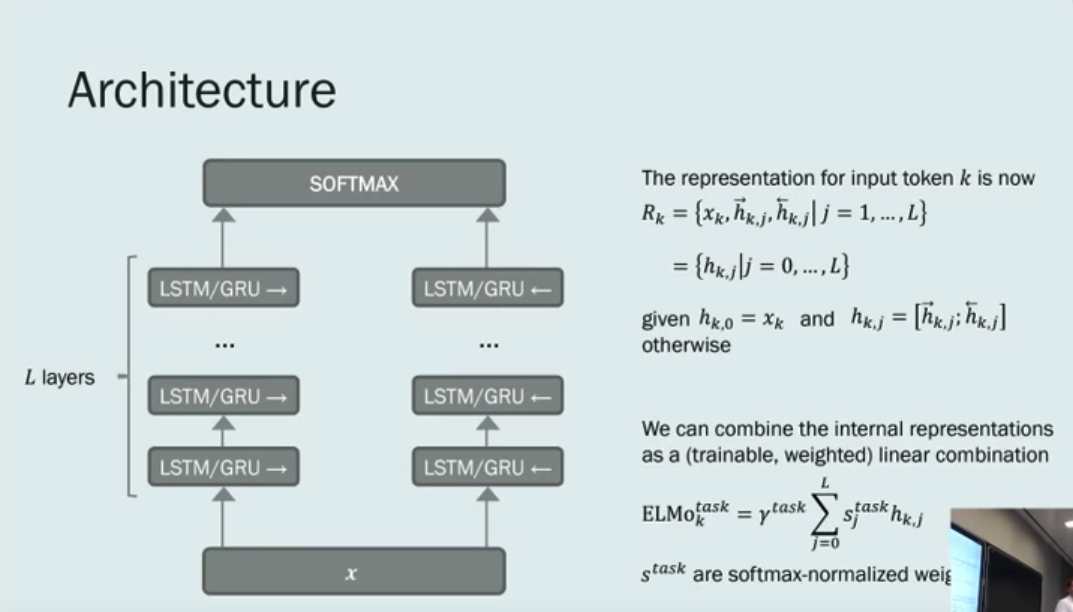

- ELMo representations combine the activations of several layers of the biLM (at each layer, the forward and backward LSTM states are concatenated). Different layers of a language model encode different kinds of information.

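The character embedding vocabulary has 262 entries because:

- character ids 0-255 are the bytes of the UTF-8 encoding

- ids 256-300 are reserved for special characters, of which five are used:

  - `self.bos_char = 256` (begin sentence)

  - `self.eos_char = 257` (end sentence)

  - `self.bow_char = 258` (begin word)

  - `self.eow_char = 259` (end word)

  - `self.pad_char = 260` (padding)

- together that is 261 ids; the 262nd is the extra padding id used at prediction time, described below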
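To make the id layout concrete, here is a minimal Python sketch of how a single word could be turned into a fixed-length list of character ids. It follows the layout listed above (UTF-8 bytes 0-255, then the special ids 256-260), but the function names and the maximum word length of 50 are my own assumptions for illustration, not the bilm-tf API.

```python
from typing import List

# Special character ids (ids 0-255 are UTF-8 bytes).
BOS_CHAR = 256  # begin sentence
EOS_CHAR = 257  # end sentence
BOW_CHAR = 258  # begin word
EOW_CHAR = 259  # end word
PAD_CHAR = 260  # padding

MAX_WORD_LENGTH = 50  # assumption: max ids per token, including BOW/EOW


def word_to_char_ids(word: str, max_len: int = MAX_WORD_LENGTH) -> List[int]:
    """Encode a word as UTF-8 byte ids wrapped in BOW/EOW, padded to max_len."""
    # Truncate the byte sequence so that BOW and EOW still fit.
    encoded = word.encode("utf-8", "ignore")[: max_len - 2]
    ids = [PAD_CHAR] * max_len
    ids[0] = BOW_CHAR
    for k, byte in enumerate(encoded, start=1):
        ids[k] = byte  # iterating over bytes yields ints in 0-255
    ids[len(encoded) + 1] = EOW_CHAR
    return ids


def marker_to_char_ids(marker: int, max_len: int = MAX_WORD_LENGTH) -> List[int]:
    """Encode a sentence boundary token (<S> or </S>) as BOW, marker, EOW, padding."""
    ids = [PAD_CHAR] * max_len
    ids[0], ids[1], ids[2] = BOW_CHAR, marker, EOW_CHAR
    return ids
```

Because the encoding works on raw UTF-8 bytes, every word, including an out-of-vocabulary one such as "Zürich", gets a valid id sequence; "ü" simply becomes the two byte ids 195 and 188.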

The `UnicodeCharsVocabulary`, which converts token strings to lists of character ids, always uses a fixed number of character embeddings, `n_characters=261`, so always set `n_characters=261` during training.

However, for prediction, each sentence is fully contained in a single batch, so sentences of different lengths are padded with a special padding id. This happens in the `Batcher`. As a result, set `n_characters=262` during prediction in the `options.json`.
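The step from 261 to 262 ids can be sketched as follows. This is a hypothetical helper, assuming the padding id is 0 and that every real character id is shifted up by one so the two never collide: real ids 0-260 become 1-261, and together with the padding id 0 the embedding table needs 262 rows.

```python
from typing import List

N_CHARACTERS_TRAIN = 261  # ids 0-260: 256 UTF-8 bytes + 5 special ids
PADDING_ID = 0            # assumption: id reserved for padded word positions


def batch_char_ids(sentences: List[List[List[int]]], max_word_len: int) -> List[List[List[int]]]:
    """Pad a batch of already-encoded sentences to the same number of words.

    Each sentence is a list of words; each word is a list of `max_word_len`
    character ids in [0, 260]. Real ids are shifted by +1 so that PADDING_ID
    never collides with a real character id.
    """
    max_len = max(len(s) for s in sentences)
    batch = []
    for sent in sentences:
        shifted = [[c + 1 for c in word] for word in sent]
        padding = [[PADDING_ID] * max_word_len for _ in range(max_len - len(sent))]
        batch.append(shifted + padding)
    return batch


# After the shift, ids range over 0..261, so the table needs 262 rows.
n_characters_predict = N_CHARACTERS_TRAIN + 1
```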

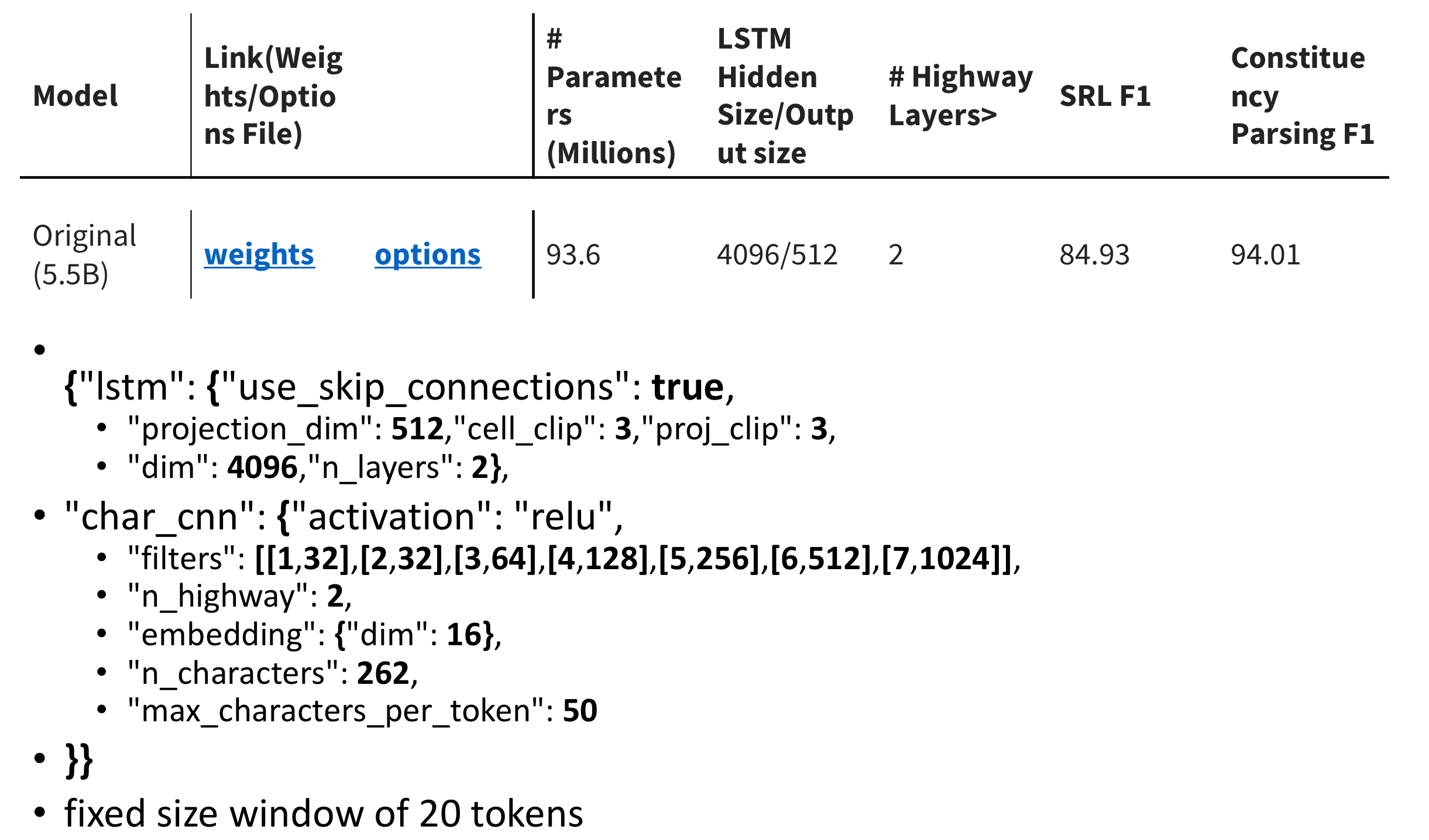

The screenshot is taken from a video that also describes the ELMo architecture. I think it is worth watching, although it lacks a more detailed explanation of the CNN over characters. On the other hand, it nicely describes how the biLSTM part works.
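Since the video skips the character CNN, here is a toy NumPy sketch of the general technique: embed each character id, run 1D convolutions of several widths over the character sequence, max-pool each filter over time, and concatenate the results into one word vector. All sizes, the random weights and the ReLU nonlinearity are hypothetical choices for illustration; the ELMo paper additionally puts highway layers and a linear projection on top, which this sketch omits.

```python
import numpy as np

rng = np.random.default_rng(0)

N_CHARS = 262                       # character vocabulary size discussed above
CHAR_DIM = 4                        # hypothetical toy embedding size
FILTERS = [(1, 3), (2, 5), (3, 8)]  # hypothetical (width, n_filters) pairs

char_emb = rng.normal(size=(N_CHARS, CHAR_DIM))
conv_weights = [rng.normal(size=(w, CHAR_DIM, n)) for w, n in FILTERS]
conv_biases = [np.zeros(n) for _, n in FILTERS]


def char_cnn(char_ids: np.ndarray) -> np.ndarray:
    """Map one word's char ids (shape [max_word_len]) to a fixed-size vector."""
    x = char_emb[char_ids]  # [max_word_len, CHAR_DIM]
    pooled = []
    for W, b in zip(conv_weights, conv_biases):
        width = W.shape[0]
        # Every window of `width` consecutive characters.
        windows = np.stack([x[i:i + width] for i in range(len(x) - width + 1)])
        # windows: [n_windows, width, CHAR_DIM], W: [width, CHAR_DIM, n_filters]
        conv = np.einsum("twc,wcf->tf", windows, W) + b
        # ReLU, then max-over-time pooling: one value per filter.
        pooled.append(np.maximum(conv, 0.0).max(axis=0))
    return np.concatenate(pooled)  # [total number of filters]
```

Because of the max-over-time pooling, the output size depends only on the number of filters (here 3 + 5 + 8 = 16), not on the word length.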

The softmax is computed over a vocabulary of 793,471 tokens.

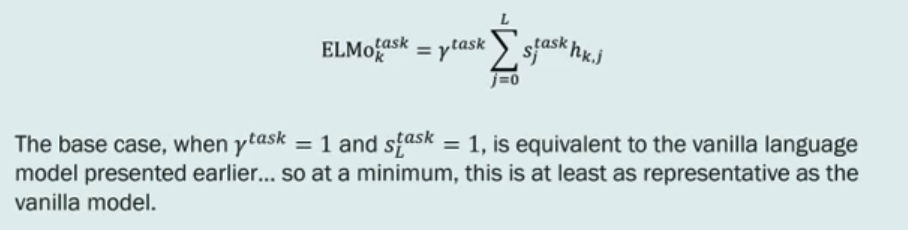

The principle is to pretrain the biLM on a large corpus and then learn task-specific weighting parameters on the downstream task's data. The final embedding is a weighted combination of the outputs of all layers: each layer's output is multiplied by its learned weight, and the weighted outputs are summed, which amounts to a weighted average.
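In the ELMo paper this combination is written as `ELMo_k = gamma * sum_j s_j * h_{k,j}`, where `h_{k,j}` is the output of layer `j` for token `k`, the `s_j` are softmax-normalized task-specific weights and `gamma` is a task-specific scale. A minimal NumPy sketch of that formula (the function and argument names are hypothetical):

```python
import numpy as np


def scalar_mix(layers: np.ndarray, s_logits: np.ndarray, gamma: float) -> np.ndarray:
    """Collapse per-layer biLM outputs into one ELMo vector per token.

    layers:   [n_layers, n_tokens, dim] activations (layer 0 = char CNN output)
    s_logits: [n_layers] unnormalized task-specific layer weights
    gamma:    task-specific scale factor
    """
    s = np.exp(s_logits - s_logits.max())
    s = s / s.sum()  # softmax-normalized layer weights
    # Weighted sum over the layer axis -> [n_tokens, dim]
    return gamma * np.tensordot(s, layers, axes=1)
```

With equal logits and `gamma=1.0` this reduces to a plain average of the layers; learning the logits lets each downstream task emphasize the layers most useful to it.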

Other notes:

- Training ran on 3 GTX 1080 GPUs for 10 epochs and took about two weeks.

- The model was trained with a fixed-size window of 20 tokens.

- The batches were constructed by padding sentences with `<S>` and `</S>`, then packing tokens from one or more sentences into each row to completely fill each batch.

- Partial sentences and the LSTM states were carried over from batch to batch so that the language model could use information across batches for context, but backpropagation was broken at each batch boundary.
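The batch construction described in these notes can be sketched for a single stream of sentences as follows. This is a simplified illustration under my own assumptions (one row stream, a trailing partial row dropped, hypothetical function name); the carry-over of LSTM states between batches and the truncated backpropagation happen in the training loop and are not shown.

```python
from typing import Iterator, List


def packed_rows(sentences: List[List[str]], window: int = 20) -> Iterator[List[str]]:
    """Yield fixed-size rows of `window` tokens from a stream of sentences.

    Each sentence is wrapped in <S> ... </S>; tokens are packed row after row,
    so one row may hold parts of several sentences and a partial sentence
    continues in the next row.
    """
    buffer: List[str] = []
    for sent in sentences:
        buffer.extend(["<S>"] + sent + ["</S>"])
        while len(buffer) >= window:
            yield buffer[:window]
            buffer = buffer[window:]
    # Assumption: leftover tokens that do not fill a whole row are dropped.
```

Example: with `window=4`, the sentences `["a", "b"]` and `["c", "d", "e"]` produce the rows `<S> a b </S>` and `<S> c d e`; the final `</S>` would start the next row.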

You can find the authors' results on their project pages.