Actions on Google with Dialogflow

I have been focusing on Actions on Google (AoG), Dialogflow and Firebase for the last few weeks. As a result, I created a simple presentation and this blog post about the differences between Actions on Google and Dialogflow, how to use them, and how to connect them into one system built around a Google Home (or another Google Assistant enabled device).

Presentation

A PDF presentation that attempts an easy-to-understand explanation of the difference between the AoG and Dialogflow systems.

Pure Firebase function

After uploading the code (with the command firebase deploy) you will find the endpoint URL for the function in the Firebase Console, in a format like “https://us-central1-…cloudfunctions.net/readWord”. You can use it even for use cases not related to AoG or Dialogflow. It integrates easily with the Firebase Realtime Database (see the example below) or with Cloud Firestore.

    const functions = require('firebase-functions');
    const admin = require('firebase-admin');

    admin.initializeApp();

    exports.readWord = functions.https.onRequest((req, res) => {
      // Read everything under /words once and join the words with spaces
      return admin.database().ref('/words/').once('value', (snap) => {
        let words = '';
        snap.forEach((childSnap) => {
          console.log(childSnap.val());
          words += childSnap.val().original + ' ';
        });
        return res.status(200).send(`<!doctype html><head><title>OK</title></head><body>${words}.</body></html>`);
      });
    });
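
For the Cloud Firestore option mentioned above, a minimal sketch might look like the following. It assumes a hypothetical `words` collection whose documents have an `original` field (mirroring the Realtime Database example), and it takes the Firestore instance as a parameter so the handler can be tried out without deploying. `makeReadWordHandler` is an illustrative name, not part of any Firebase API.

```javascript
// Sketch only: assumes a "words" collection with an `original` field per document.
// `db` is expected to be a Firestore instance, e.g. admin.firestore().
function makeReadWordHandler(db) {
  return async (req, res) => {
    try {
      const snapshot = await db.collection('words').get();
      const words = [];
      snapshot.forEach((doc) => words.push(doc.data().original));
      res.status(200).send(
        `<!doctype html><head><title>OK</title></head><body>${words.join(' ')}.</body></html>`
      );
    } catch (err) {
      console.error(err);
      res.status(500).send('Could not read words.');
    }
  };
}

// Wiring it up in index.js would look roughly like:
// exports.readWordFirestore = functions.https.onRequest(makeReadWordHandler(admin.firestore()));
```

Passing the database in, instead of calling `admin.firestore()` inside the handler, is only a convenience for local testing with a fake object.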

Dialogflow type function

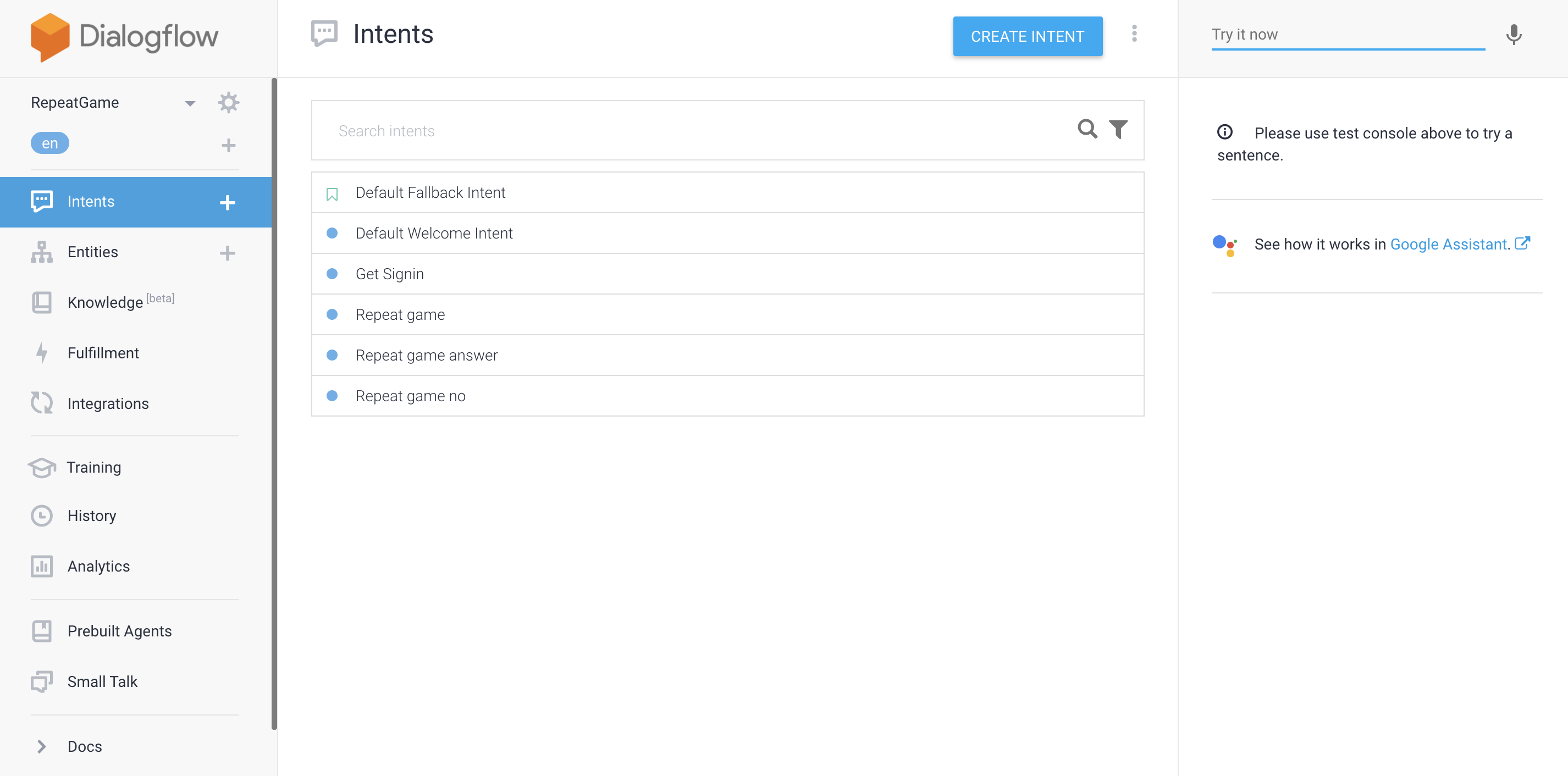

The intent name “Default Welcome Intent” has to match the name of the intent in the Dialogflow Console.

    const functions = require('firebase-functions');
    const {dialogflow, SignIn} = require('actions-on-google');

    const app = dialogflow({
      clientId: "..."
    });

    process.env.DEBUG = 'dialogflow:debug'; // enables lib debugging statements

    app.intent('Default Welcome Intent', (conv) => {
      if (typeof conv.user.storage.userId === 'undefined' || conv.user.storage.userId === null) {
        // No stored user yet: start the account sign-in flow
        conv.ask(new SignIn());
      } else {
        const msg = `Hi ${conv.user.storage.payload.name}! Let's train your memory. Are you ready?`;
        // log_to_firebase is a helper that is not shown in this snippet
        log_to_firebase(msg, conv.user.storage.userId);
        conv.ask(msg);
      }
    });

    exports.dialogflowFirebaseFulfillment = functions.https.onRequest(app);
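
The welcome handler above reads `userId` and `payload` from `conv.user.storage`, but the step that fills them in is not shown. One way it could be done is in the handler of a Dialogflow intent triggered by the `actions_intent_SIGN_IN` event. The sketch below is an assumption about that step, not the original code: the intent name `Get Sign In`, the function name `handleSignIn`, and the choice of the token's `sub` field as `userId` are all illustrative.

```javascript
// Sketch only: handler for a Dialogflow intent triggered by the
// actions_intent_SIGN_IN event (the intent name below is hypothetical).
function handleSignIn(conv, params, signin) {
  if (signin.status !== 'OK') {
    conv.close('I cannot continue without sign-in. Bye!');
    return;
  }
  // With clientId configured, the library exposes the decoded ID token here.
  const payload = conv.user.profile.payload;
  // Assumption: the welcome intent reads these two keys from user storage.
  conv.user.storage.userId = payload.sub;
  conv.user.storage.payload = payload;
  conv.ask(`Thanks for signing in, ${payload.name}. Are you ready?`);
}

// Registration would look roughly like:
// app.intent('Get Sign In', handleSignIn);
```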

In some sources you can find an implementation based on WebhookClient, but this approach seems to be less well documented than the one above. On the other hand, it makes it easy to log all messages, because you have access to all the headers and the body coming from Google Assistant ({ request, response }). The keys of the map (String to Function) have to match the names of the intents defined in your Dialogflow Console.

    const functions = require('firebase-functions');
    // Dialogflow stuff
    const {WebhookClient, Card, Suggestion} = require('dialogflow-fulfillment');

    exports.dialogflowFirebaseFulfillment = functions.https.onRequest((request, response) => {
      const agent = new WebhookClient({ request, response });
      console.log('Dialogflow Request headers: ' + JSON.stringify(request.headers));
      console.log('Dialogflow Request body: ' + JSON.stringify(request.body));

      function cardTitle(agent) {
        agent.add(new Card({
          title: `Card title`,
          imageUrl: 'https://...png',
          text: `This is the body text of a card`,
          buttonText: 'This is a button',
          buttonUrl: 'https://assistant.google.com/'
        }));
        agent.add(new Suggestion(`Quick Reply`));
        agent.add(new Suggestion(`Suggestion`));
      }

      function greetings(agent) {
        agent.add('Hello world!');
      }

      function repeatAfterMe(agent) {
        // ...
      }

      // Keys must match the intent names in the Dialogflow Console
      let intentMap = new Map();
      intentMap.set('Card Title', cardTitle);
      // Enable once googleAssistantHandler is defined (not shown in this snippet):
      // intentMap.set('Assistant Handler', googleAssistantHandler);
      intentMap.set('Default Welcome Intent - yes', repeatAfterMe);
      agent.handleRequest(intentMap);
    });
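
To illustrate why the map keys have to equal the intent names: a Dialogflow v2 webhook request carries the display name of the matched intent in `queryResult.intent.displayName`, and the handler is looked up by that string. The sketch below mimics this lookup in plain JavaScript. It is not the library's actual implementation, and `dispatch` is a hypothetical helper.

```javascript
// Sketch only: mimics the name-to-handler lookup, not the library's real code.
function dispatch(body, intentMap) {
  const intentName = body.queryResult.intent.displayName;
  const handler = intentMap.get(intentName);
  if (!handler) {
    // A misspelled key ends up here, because the lookup is an exact string match.
    return { fulfillmentText: `No handler for intent "${intentName}".` };
  }
  return { fulfillmentText: handler(body.queryResult.parameters) };
}

const intentMap = new Map();
intentMap.set('Default Welcome Intent', () => 'Hello world!');

const reply = dispatch(
  { queryResult: { intent: { displayName: 'Default Welcome Intent' }, parameters: {} } },
  intentMap
);
console.log(reply.fulfillmentText); // prints "Hello world!"
```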

Actions on Google type function

‘actions.intent.TEXT’ is an AoG-specific intent (see the PDF presentation).

    const functions = require('firebase-functions');
    const {actionssdk, NewSurface} = require('actions-on-google');

    const app = actionssdk();

    app.intent('actions.intent.TEXT', (conv, input) => {
      console.log(conv);
      if (input === 'bye' || input === 'goodbye') {
        return conv.close('See you later!');
      }
      const context = 'Sure, I have a mobile app for you.';
      const notification = 'Start registration app';
      const capabilities = ['actions.capability.SCREEN_OUTPUT'];
      // Offer to move the conversation to a device with a screen, if the user has one
      if (conv.available.surfaces.capabilities.has('actions.capability.SCREEN_OUTPUT')) {
        return conv.ask(new NewSurface({context, notification, capabilities}));
      } else {
        return conv.close('Sorry, you need a phone to continue with registration.');
      }
    });

    exports.dialogflowFirebaseFulfillment = functions.https.onRequest(app);
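
After a NewSurface request, the Assistant reports the user's decision through the `actions.intent.NEW_SURFACE` intent. A minimal sketch of a handler for it might look like this. The function name `handleNewSurface` and the reply texts are illustrative and not taken from the original code.

```javascript
// Sketch only: handles the user's answer to the NewSurface request.
function handleNewSurface(conv, input, newSurface) {
  if (newSurface.status === 'OK') {
    // The conversation has moved to the device with a screen.
    conv.close('Great, let us continue with the registration on your phone.');
  } else {
    conv.close('OK, no problem. Come back when you have your phone at hand.');
  }
}

// Registration would look roughly like:
// app.intent('actions.intent.NEW_SURFACE', handleNewSurface);
```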

User object

The user object contains information about the user, including a string identifier and personal information such as email and location (accessing these requires asking the user for permission). To get the User object, access conv.user.
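
As a rough illustration of the permission step, the sketch below shows a handler for the permission result in an Actions SDK app. It assumes the NAME permission was requested earlier in the conversation. `handlePermission` and the reply texts are hypothetical.

```javascript
// Sketch only: handler for the permission result. Asking would be done
// earlier with conv.ask(new Permission({ context: '...', permissions: 'NAME' })).
function handlePermission(conv, input, granted) {
  if (!granted) {
    conv.close('No problem, I will not use your name.');
    return;
  }
  // conv.user.name is only populated after the user grants the NAME permission.
  conv.ask(`Nice to meet you, ${conv.user.name.display}!`);
}

// Registration would look roughly like:
// app.intent('actions.intent.PERMISSION', handlePermission);
```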

Notes

- You cannot distinguish between several Google Assistant devices registered under the same account. The reason may be to provide a “one-big-ecosystem” feeling and the ability to continue a conversation on any of your devices.

- If you are on the same Wi-Fi network, you can use the open Google Home API (it may not stay “open” for long). To get the information, send a GET/POST request to the IP address of your device (you can find it in the settings or, if you don’t have access to them, by brute force: ping all possible addresses in your local network).

- Google Home API documented by rithvikvibhu

- Google Home API documented by rithvikvibhu (in Apiary)

- You can get name, language, settings, timezones and much more information about the device

- How to design user-like conversation

- Possible responses on devices with display capabilities (if there are only sound capabilities, you can use Speech Synthesis Markup Language (SSML))
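
The local Google Home lookup described in the notes can be sketched roughly as follows. Port 8008 and the `/setup/eureka_info` path are assumptions taken from the unofficial documentation and may stop working at any time. The helper names and the `192.168.1` prefix are illustrative, and the scan assumes a /24 home network.

```javascript
const http = require('http');

// Assumption: port 8008 and /setup/eureka_info come from the unofficial docs.
function eurekaInfoUrl(ip) {
  return `http://${ip}:8008/setup/eureka_info`;
}

// Lists every host address in a /24 network, e.g. prefix "192.168.1".
function candidateAddresses(prefix) {
  const addresses = [];
  for (let host = 1; host <= 254; host++) {
    addresses.push(`${prefix}.${host}`);
  }
  return addresses;
}

// Resolves with the parsed JSON, or null if the host does not answer in time.
function probe(ip, timeoutMs = 1000) {
  return new Promise((resolve) => {
    const req = http.get(eurekaInfoUrl(ip), { timeout: timeoutMs }, (res) => {
      let data = '';
      res.on('data', (chunk) => { data += chunk; });
      res.on('end', () => {
        try { resolve(JSON.parse(data)); } catch (e) { resolve(null); }
      });
    });
    req.on('timeout', () => req.destroy());
    req.on('error', () => resolve(null));
    // Fires last in every case; a no-op if the promise is already resolved.
    req.on('close', () => resolve(null));
  });
}

// Brute-force scan of the local network (uncomment to run):
// Promise.all(candidateAddresses('192.168.1').map((ip) => probe(ip)))
//   .then((results) => console.log(results.filter(Boolean)));
```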