Human-Level AI 2018

Over the last few days I attended the Human-Level AI conference. I went partly out of curiosity to see what is currently hyped in AGI (Artificial General Intelligence), and partly because I like networking and meeting new people. Unfortunately, the ticket price was not student-friendly, so I volunteered in order to attend the conference for free and see at least a few of the presentations. It was a very interesting experience, because almost all of the speakers had a very high level of knowledge and were experts in their fields.

I will try to summarize my more or less subjective highlights from the conference, which lasted four days and was held first in Dejvice and then in the Hybernia theater.

Highlights

BICA + Keynotes (22.8)

- monistic idealism: consciousness is the ground of all being. Mind creates matter, and the physical world does not exist objectively outside of the mind -> we are living in a dream created by our mind

- mechanistic monism: the brain is made of interconnected components, each performing some function within the overall system, and consciousness is only the computational sum of all these parts

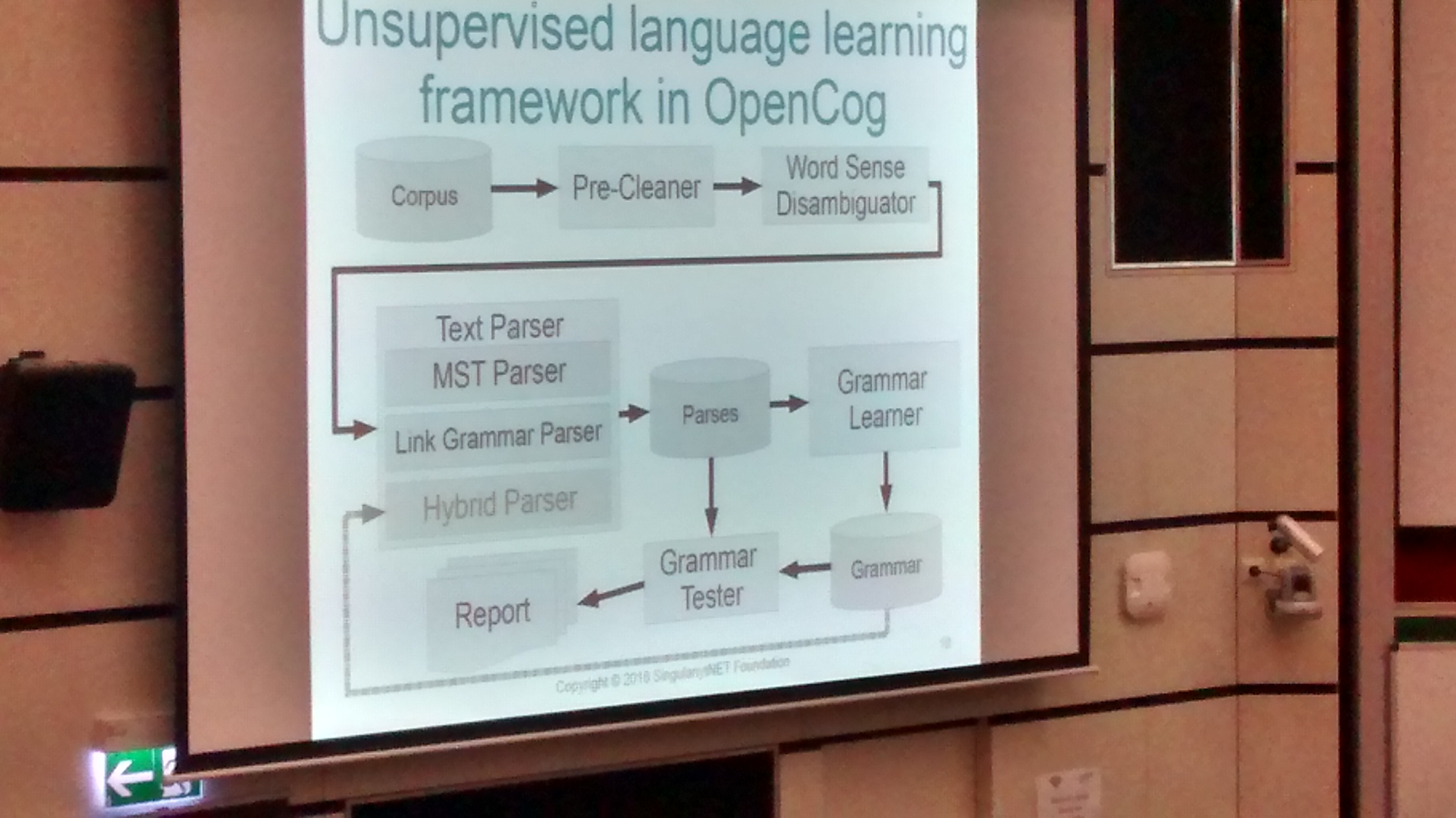

- OpenCog project

  - multiple algorithms working in cooperation

  - part of SingularityNet

  - AtomSpace (OpenCog's hypergraph knowledge store)

- SingularityNet

  - blockchain-based network for trading, exchanging, and developing AI services

- Ben Goertzel named his child after AGI algorithms. He said that if he doesn't create AGI himself, it is his child's destiny to do so because of the name.

- Robot meditation instructor: for some people it is more suitable than a human one; it mirrors the person's facial expressions, voice, and rhythms

- It is better for companies like GoodAI to pursue this goal than for the military to pursue the same aim

- Video about an incrementally learning RBM (restricted Boltzmann machine)

- Book recommendation from Tomas Mikolov

BICA + Keynotes (23.8)

- Representing sets as summed semantic vectors

  - a better approach is to sum the word embeddings, because when you average more than two vectors you lose information about the original vectors

  - suitable for classifying whether one set of words is similar to another set of words

- VisualGenome
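To make the set-similarity idea from the summed semantic vectors talk concrete, here is a minimal sketch. It assumes a tiny, made-up embedding table (`EMBEDDINGS`, hypothetical four-dimensional vectors rather than trained word vectors) and compares two word sets by the cosine similarity of their summed vectors; `set_vector` and `cosine` are illustrative helper names, not taken from the talk.

```python
import math

# Hypothetical toy embeddings (4-dimensional, made up for illustration);
# real word vectors would come from a trained model.
EMBEDDINGS = {
    "cat":   [0.9, 0.1, 0.0, 0.0],
    "dog":   [0.8, 0.2, 0.1, 0.0],
    "mouse": [0.7, 0.0, 0.3, 0.0],
    "car":   [0.0, 0.1, 0.0, 0.9],
    "truck": [0.1, 0.0, 0.1, 0.8],
}


def set_vector(words):
    """Represent a set of words as the element-wise sum of their embeddings."""
    dim = len(next(iter(EMBEDDINGS.values())))
    total = [0.0] * dim
    for word in words:
        for i, value in enumerate(EMBEDDINGS[word]):
            total[i] += value
    return total


def cosine(u, v):
    """Cosine similarity: 1.0 for parallel vectors, 0.0 for orthogonal ones."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


animals = set_vector(["cat", "dog"])
# The animal set should be far more similar to another animal set than to vehicles.
print(round(cosine(animals, set_vector(["mouse", "dog"])), 3))
print(round(cosine(animals, set_vector(["car", "truck"])), 3))
```

Cosine similarity ignores vector length, so for this particular comparison summing and averaging give the same score; keeping the raw sum matters when you later want to read the individual members and their weights back out of the vector.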

Keynotes (24.8)

- Turing machines: change the input and it changes the output

- **Super-Turing machines**: change the input and it changes the program

- Core capabilities of DARPA's L2M (Lifelong Learning Machines) system:

  - continual learning

  - knowing when to learn and when not to

  - adaptation to new tasks

  - goal-driven behavior

- Examples:

- Albert Einstein: “Once you stop learning, you start dying”

- Minimum spanning tree

  - A spanning tree of a connected, undirected, weighted graph is a subset of its edges that connects all vertices without forming a cycle. The cost of a spanning tree is the sum of the weights of its edges. A graph can have many spanning trees; a minimum spanning tree is one whose cost is the smallest among all of them. There can also be several minimum spanning trees (when all edge weights are distinct, it is unique).

  - Minimum spanning trees have direct applications in network design. They are also used in algorithms approximating the travelling salesman problem, the multi-terminal minimum cut problem, and minimum-cost weighted perfect matching. Other practical applications include:

    - Cluster analysis

    - Handwriting recognition

    - Image segmentation
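The notes above do not say how a minimum spanning tree is computed; one standard method is Kruskal's algorithm (Prim's algorithm is another). The sketch below assumes a connected undirected graph whose vertices are numbered `0..n-1` and whose edges are given as `(weight, u, v)` tuples; the example graph and the `kruskal_mst` name are made up for illustration. It sorts the edges by weight and keeps each edge that joins two different components, tracked with a union-find structure.

```python
def kruskal_mst(num_vertices, edges):
    """Return (total_cost, mst_edges) for a connected undirected graph.

    edges: list of (weight, u, v) tuples, vertices numbered 0..num_vertices-1.
    """
    parent = list(range(num_vertices))  # union-find: each vertex starts alone

    def find(x):
        # Follow parent pointers to the component root, halving the path.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    total, chosen = 0, []
    for weight, u, v in sorted(edges):  # cheapest edges first
        root_u, root_v = find(u), find(v)
        if root_u != root_v:  # edge joins two components, so it adds no cycle
            parent[root_u] = root_v
            total += weight
            chosen.append((u, v, weight))
            if len(chosen) == num_vertices - 1:  # a tree on n vertices has n-1 edges
                break
    return total, chosen


edges = [(4, 0, 1), (1, 1, 2), (3, 0, 2), (2, 2, 3), (5, 1, 3)]
cost, tree = kruskal_mst(4, edges)
print(cost, tree)
```

Sorting dominates the running time, giving O(E log E) overall; the union-find check is what guarantees that every accepted edge keeps the partial result a forest.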

Future of AI (25.8)

- Humans can do much more with less data than machines -> we should focus not on collecting as much data as possible but on being able to generalize from what we already know

- SCAN: Learning Abstract Hierarchical Compositional Visual Concepts

- Integrated Information Theory

  - Integrated information theory (IIT) attempts to explain what consciousness is and why it might be associated with certain physical systems. Given any such system, the theory predicts whether that system is conscious, to what degree it is conscious, and what particular experience it is having.

- The creator of PicBreeder showed some of the best pictures created with the tool.

- Minimal Criterion Coevolution: A New Approach to Open-Ended Search

- Learning to Learn without Gradient Descent by Gradient Descent

Representing sets as summed semantic vectors

Representing meaning in the form of high dimensional vectors is a common and powerful tool in biologically inspired architectures. While the meaning of a set of concepts can be summarized by taking a (possibly weighted) sum of their associated vectors, this has generally been treated as a one-way operation. In this paper we show how a technique built to aid sparse vector decomposition allows in many cases the exact recovery of the inputs and weights to such a sum, allowing a single vector to represent an entire set of vectors from a dictionary. We characterize the number of vectors that can be recovered under various conditions and explore several ways such a tool can be used for vector-based reasoning.
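The abstract does not name the exact decomposition technique, so the sketch below swaps in orthogonal matching pursuit (OMP), a standard greedy sparse-recovery method, to illustrate the general idea of reading a set and its weights back out of a single summed vector. It assumes a hypothetical dictionary of random unit vectors in 1024 dimensions (nearly orthogonal at that size) and made-up weights; `omp` and `solve` are illustrative helpers, not the paper's code.

```python
import math
import random


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def solve(matrix, rhs):
    """Solve a small square linear system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [b] for row, b in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (aug[r][n] - sum(aug[r][c] * x[c] for c in range(r + 1, n))) / aug[r][r]
    return x


def omp(target, dictionary, max_atoms, tol=1e-9):
    """Orthogonal matching pursuit: greedily pick atoms, then refit all weights."""
    support, weights = [], []
    residual = target[:]
    while len(support) < max_atoms and math.sqrt(dot(residual, residual)) > tol:
        # Pick the dictionary vector most correlated with the unexplained part.
        best = max(range(len(dictionary)), key=lambda i: abs(dot(residual, dictionary[i])))
        support.append(best)
        atoms = [dictionary[i] for i in support]
        # Least-squares weights via the normal equations on the chosen atoms.
        gram = [[dot(a, b) for b in atoms] for a in atoms]
        weights = solve(gram, [dot(a, target) for a in atoms])
        approx = [sum(w * a[j] for w, a in zip(weights, atoms)) for j in range(len(target))]
        residual = [t - p for t, p in zip(target, approx)]
    return dict(zip(support, weights))


# Hypothetical dictionary: 50 random unit vectors in 1024 dimensions.
random.seed(0)
DIM, VOCAB = 1024, 50
dictionary = []
for _ in range(VOCAB):
    v = [random.gauss(0.0, 1.0) for _ in range(DIM)]
    norm = math.sqrt(dot(v, v))
    dictionary.append([x / norm for x in v])

# Sum three dictionary vectors with made-up weights, then try to recover them.
true_weights = {3: 1.0, 17: 0.5, 42: 2.0}
summed = [sum(w * dictionary[i][j] for i, w in true_weights.items()) for j in range(DIM)]
recovered = omp(summed, dictionary, max_atoms=5)
print({i: round(w, 6) for i, w in sorted(recovered.items())})
```

Recovery works here because only a few vectors are summed and random high-dimensional vectors overlap very little; with many more summed vectors relative to the dimension, the greedy step can pick a wrong vector, which is consistent with the abstract's point that the number of recoverable vectors depends on the conditions.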